UNDERSTANDING THE NEED FOR A SYSTEM LIKE KUBERNETES

- Let's take a quick look at how application development and deployment have changed in recent years:

MOVE FROM MONOLITHIC APPLICATIONS TO MICROSERVICES

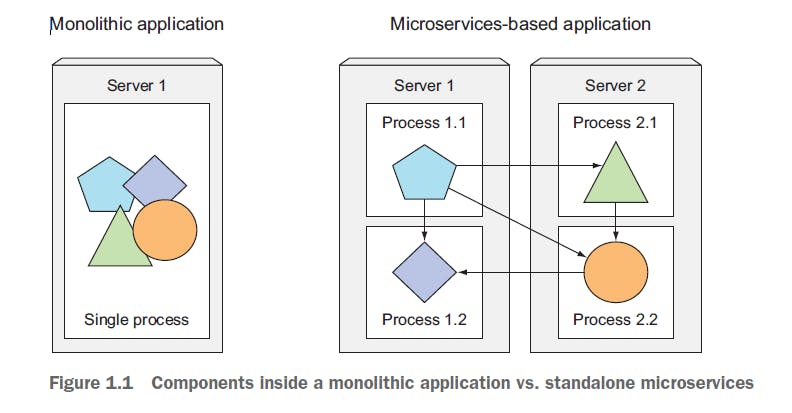

Monolithic applications are made up of components that are all tightly coupled and must be developed, deployed, and managed as a single entity because they all run as a single operating system process.

Changes to one part of the application require redeployment of the entire application. Over time, the lack of hard boundaries between those parts leads to growing interdependencies, increased complexity, and a subsequent deterioration in the quality of the whole system.

Running a monolithic application usually requires a few powerful servers which can provide enough resources to run the application.

To cope with increased load on the system, you must either scale the servers vertically (scaling up) by adding more processors, memory, and other server components, or scale the entire system horizontally (scaling out) by setting up additional servers and running multiple copies (or replicas) of the application.

Although scaling up generally does not require any changes to the application, it becomes expensive relatively quickly and, in practice, always has an upper limit.

Scaling out, on the other hand, is relatively inexpensive on the hardware side, but may require significant changes in application code and is not always possible.

Some parts of an application are extremely difficult or nearly impossible to scale horizontally (relational databases, for example).

If part of a monolithic application is not scalable, the whole application becomes non-scalable, unless you can split the monolith somehow.

SPLITTING APPLICATIONS INTO MICROSERVICES

These and other issues forced us to start breaking complex monolithic applications into smaller, independently deployable components called microservices.

Each microservice runs as an independent process and communicates with other microservices through simple and well-defined interfaces (APIs).

A change to one of them does not require changing or redeploying any other service, provided that the API does not change or changes only in a backward-compatible way.
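To make "backward-compatible" concrete, here is a minimal Python sketch. The order API, its field names, and the helper functions are all hypothetical, invented only for illustration. The consumer reads just the fields it knows, so adding a field does not break it, while renaming an existing field does.

```python
import json


def parse_order_v1(payload: str) -> dict:
    """Hypothetical consumer written against version 1 of an order API.

    It reads only the fields it knows about and ignores everything else.
    """
    data = json.loads(payload)
    return {"id": data["id"], "total": data["total"]}


def is_compatible(payload: str) -> bool:
    """Return True if the v1 consumer can still parse the payload."""
    try:
        parse_order_v1(payload)
        return True
    except KeyError:
        return False


# Response from the original provider.
old_response = json.dumps({"id": 7, "total": 19.5})

# Backward-compatible change: a field is added, nothing is removed or renamed.
new_response = json.dumps({"id": 7, "total": 19.5, "currency": "EUR"})

# Breaking change: an existing field is renamed.
breaking_response = json.dumps({"id": 7, "amount": 19.5})
```

With the first two responses the consumer keeps working unchanged. The third would force the consumer to be modified and redeployed together with the provider, which is exactly the coupling microservices try to avoid.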

SCALING MICROSERVICES

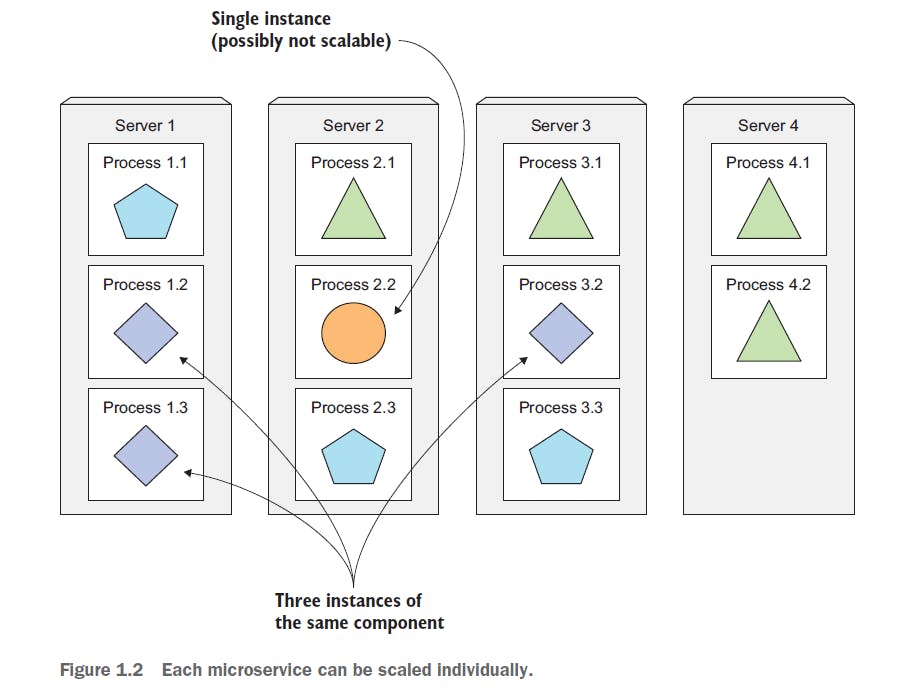

- Unlike monolithic systems, where you must scale the system as a whole, microservices are scaled on a service-by-service basis: you can scale only the services that require more resources while leaving the others at their original scale.
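The difference can be illustrated with a rough back-of-the-envelope sketch in Python. The service names, loads, and per-replica capacities below are made-up numbers, and the model is deliberately simplified: it assumes each monolith copy carries every part of the application.

```python
import math


def replicas_needed(load: float, capacity_per_replica: float) -> int:
    """Smallest number of replicas able to absorb the given load."""
    return max(1, math.ceil(load / capacity_per_replica))


# Hypothetical load (requests/s) and per-replica capacity for three services.
services = {
    "frontend": {"load": 900, "capacity": 300},
    "orders": {"load": 100, "capacity": 200},
    "search": {"load": 50, "capacity": 200},
}

# Microservices: each service gets only the replicas it needs.
per_service = {
    name: replicas_needed(s["load"], s["capacity"])
    for name, s in services.items()
}
microservice_instances = sum(per_service.values())

# Monolith (simplified): every copy contains all three parts, so the busiest
# part dictates how many copies of the whole application must run.
monolith_copies = max(per_service.values())
monolith_component_instances = monolith_copies * len(services)
```

Under these assumptions, the microservices deployment runs five component instances in total, while the monolith ends up running nine, most of them idle copies of the lightly loaded parts.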

DEPLOYING MICROSERVICES

As always, microservices also have drawbacks.

As the number of these components increases, deployment-related decisions become increasingly difficult because not only does the number of deployment combinations increase, but the number of interdependencies between components increases even more.

Microservices do their work as a team, so they need to find each other and talk to each other.

When they are deployed, someone or something has to configure them all properly to allow them to work together as one system.

As the number of microservices grows, this becomes tedious and error-prone, especially when you consider what ops/sysadmin teams must do when a server fails.
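One common way to let services find each other is a registry that maps service names to the addresses of their running instances. The following is a toy, in-memory Python sketch of that idea. The class, method names, and addresses are hypothetical, and this is not how any particular platform implements discovery.

```python
from typing import Dict, List


class ServiceRegistry:
    """Toy in-memory registry mapping a service name to its known addresses."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[str]] = {}

    def register(self, name: str, address: str) -> None:
        """Record that an instance of `name` is reachable at `address`."""
        self._endpoints.setdefault(name, []).append(address)

    def deregister(self, name: str, address: str) -> None:
        """Forget an instance, e.g. after the server hosting it has failed."""
        if address in self._endpoints.get(name, []):
            self._endpoints[name].remove(address)

    def lookup(self, name: str) -> List[str]:
        """Return a copy of the addresses currently known for `name`."""
        return list(self._endpoints.get(name, []))


registry = ServiceRegistry()
registry.register("orders", "10.0.0.5:8080")
registry.register("orders", "10.0.0.6:8080")

# The server at 10.0.0.5 fails, so its instance must be removed.
registry.deregister("orders", "10.0.0.5:8080")
```

Keeping such a registry correct by hand across many services and servers is the tedious, error-prone work described above, which is why it is usually automated.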

Microservices also pose other challenges, such as difficulty in debugging and tracing runtime calls, since they span multiple processes and machines.

UNDERSTANDING THE DIVERGENCE OF ENVIRONMENT REQUIREMENTS

The components of a microservices architecture are not only deployed independently, but are also developed that way.

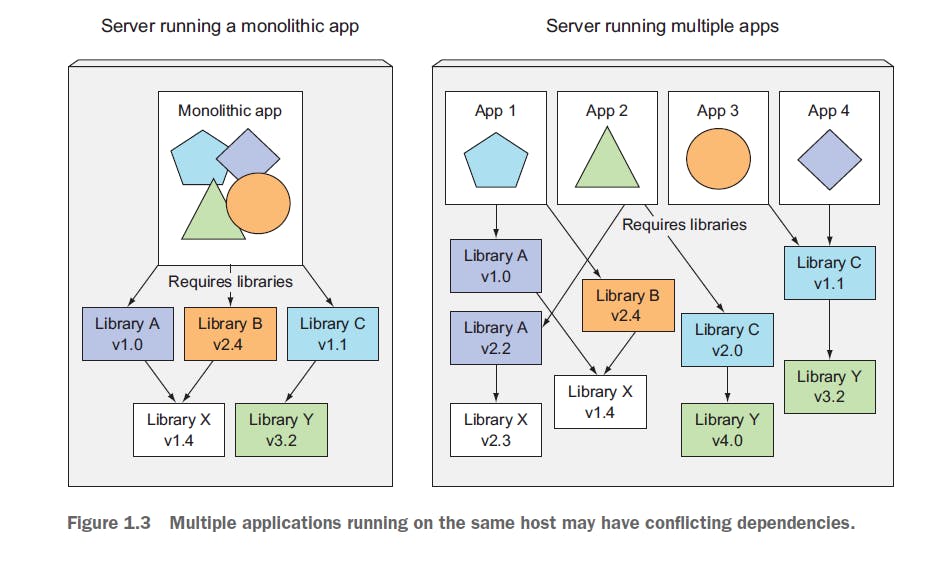

Due to their independence and the fact that it is common to have separate teams developing each component, nothing prevents each team from using different libraries and replacing them whenever the need arises.

Deploying dynamically linked applications that require different versions of shared libraries and/or require other environment specifics can quickly become a nightmare for the operations team deploying and managing them on production servers.

The more components you need to deploy on the same host, the more difficult it will be to manage all of their dependencies to meet all of their requirements.
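The problem can be sketched in a few lines of Python. The component names, library names, and version numbers below are hypothetical. The function simply reports every library that components sharing one host require in more than one version.

```python
from collections import defaultdict

# Hypothetical components and the exact shared-library versions they need.
requirements = {
    "billing": {"libssl": "1.1", "libxml": "2.9"},
    "catalog": {"libssl": "3.0", "libxml": "2.9"},
    "shipping": {"libssl": "1.1"},
}


def find_conflicts(reqs):
    """Return libraries that components on one host need in different versions."""
    versions = defaultdict(lambda: defaultdict(list))
    for component, libs in reqs.items():
        for lib, version in libs.items():
            versions[lib][version].append(component)
    # Keep only libraries requested in more than one version.
    return {
        lib: {version: sorted(users) for version, users in by_version.items()}
        for lib, by_version in versions.items()
        if len(by_version) > 1
    }


conflicts = find_conflicts(requirements)
```

Here `libssl` is reported as a conflict because `catalog` needs a different version than `billing` and `shipping`, while `libxml` is not, since everyone agrees on it. Every component added to the host is another chance for such a clash.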

PROVIDING A CONSISTENT ENVIRONMENT TO APPLICATIONS

No matter how many individual components you develop and deploy, one of the biggest issues that developers and operations teams always have to deal with is the differences in the environments in which they run their applications.

Not only is there a huge difference between development and production environments, there are even differences between individual production machines.

Another unavoidable fact is that the environment of a single production machine will change over time (ranging from hardware to operating system to libraries available on each machine).

Production environments are managed by the operations team, while developers often take care of their development laptops on their own.

These two groups differ in how much they know about system administration, which naturally leads to relatively large differences between the two environments, not to mention that system administrators place much more importance on keeping the system up to date with the latest security patches, while many developers care less about that.

Additionally, a production system must provide the correct environment for all of the applications it hosts, even though they may require different or even conflicting versions of the libraries.

To reduce the number of issues that only appear in production, it would be ideal if applications could run in the exact same environment during development and in production, with the same operating system, libraries, system configuration, network environment, and everything else.

You also don't want this environment to change too much over time, if at all.

Also, if possible, you want to be able to add applications to the same server without affecting any of the existing applications on that server.

INTRODUCING CONTAINER TECHNOLOGIES

- Kubernetes uses Linux container technologies to isolate running applications, so before digging into Kubernetes itself, you should familiarize yourself with the basics of containers to understand what Kubernetes does itself and what it offloads to container technologies like Docker or rkt.

UNDERSTANDING WHAT CONTAINERS ARE

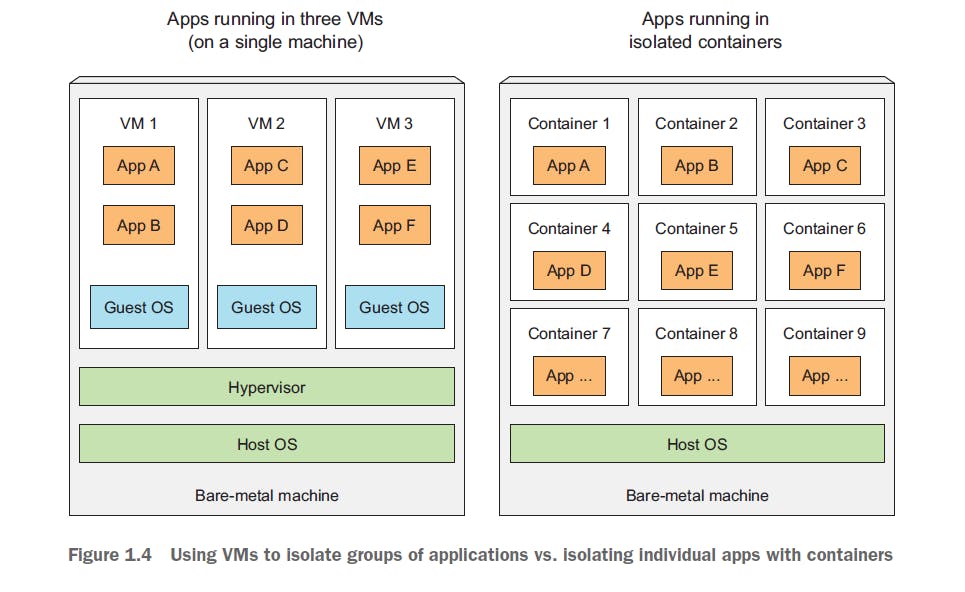

When an application is composed of only a small number of large components, it is perfectly acceptable to give a dedicated virtual machine (VM) to each component and isolate their environments by providing each of them with its own operating system instance.

But when those components start getting smaller and their numbers start increasing, you can't give each of them its own virtual machine if you want to avoid wasting hardware resources and keep your hardware costs down.

But it's not just about wasting hardware resources. Since each virtual machine usually needs to be configured and managed individually, increasing the number of virtual machines also wastes human resources, because it greatly increases the workload of system administrators.

ISOLATING COMPONENTS WITH LINUX CONTAINER TECHNOLOGIES

- Instead of using virtual machines to isolate environments for each microservice (or software process in general), developers are turning to Linux container technologies.

They allow you to run multiple services on the same host machine, while not only exposing a different environment to each of them, but also isolating them from each other, similar to virtual machines, but with a lot less overhead.

A process running in a container runs inside the host's operating system, like all other processes (unlike virtual machines, where processes run in separate operating systems).

But the process in the container is still isolated from other processes. For the process itself, it appears to be the only one running on the machine and in its OS.

INTRODUCING THE DOCKER CONTAINER PLATFORM

- Although container technologies have been around for a long time, they have become more widely known with the rise of the Docker container platform. Docker was the first container system to make containers easily portable across different machines. It has simplified the process of packaging not only the application, but all of its libraries and other dependencies, even the entire operating system filesystem, into a simple and portable package that can be used to provision the application on any other machine running Docker.

INTRODUCING KUBERNETES

We have already shown that as the number of deployable application components in your system increases, it becomes more difficult to manage them all.

Google was probably the first company to realize that it needed a much better way to deploy and manage its software components and infrastructure to scale globally.

It is one of the few companies in the world that run hundreds of thousands of servers and have to manage deployments on such a scale.

This required them to develop solutions to make the development and deployment of thousands of software components manageable and cost-effective.

UNDERSTANDING ITS ORIGINS

Over the years, Google developed an internal system called Borg (and later a new system called Omega), which helped both app developers and system administrators manage those thousands of apps and services. In addition to simplifying development and management, it also helped them achieve much higher utilization of their infrastructure, which is important when your organization is this large. When you're running hundreds of thousands of machines, even tiny improvements in utilization mean savings of millions of dollars, so the incentives for developing such a system are clear.

After keeping Borg and Omega secret for an entire decade, in 2014 Google introduced Kubernetes, an open-source system based on experience gained from Borg, Omega, and other internal Google systems.