What is a Pod

A pod is a group of one or more closely related containers that will always run together on the same worker node and in the same Linux namespace(s).

Each pod is like a separate logical machine with its own IP address, hostname, processes, and so on, running a single application.

The application can be a single process, running in a single container, or it can be a main application process and an additional support process, each running in its own container.

All containers in a pod will appear to run on the same logical machine, while containers in other pods, even if running on the same worker node, will appear to be running on a different one.
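As a minimal sketch of the "main process plus support process" case described above, the following Python snippet builds a pod manifest with two containers. The pod name, container names, and image references are hypothetical and chosen only for illustration; the field names (`apiVersion`, `kind`, `metadata`, `spec.containers`) are those of the standard Pod object.

```python
import json

# Hypothetical pod: a main web application plus a supporting log shipper.
# All names and image references below are illustrative assumptions.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-log-shipper"},
    "spec": {
        # Both containers belong to the same pod, so they are always
        # scheduled together onto the same worker node.
        "containers": [
            {
                "name": "web",
                "image": "example.com/web:1.0",
                "ports": [{"containerPort": 8080}],
            },
            {
                "name": "log-shipper",
                "image": "example.com/log-shipper:1.0",
            },
        ]
    },
}

container_names = [c["name"] for c in pod_manifest["spec"]["containers"]]

if __name__ == "__main__":
    # Kubernetes accepts manifests in JSON as well as YAML.
    print(json.dumps(pod_manifest, indent=2))
```

Each process still lives in its own container, but the pod is the unit that gets scheduled and addressed.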

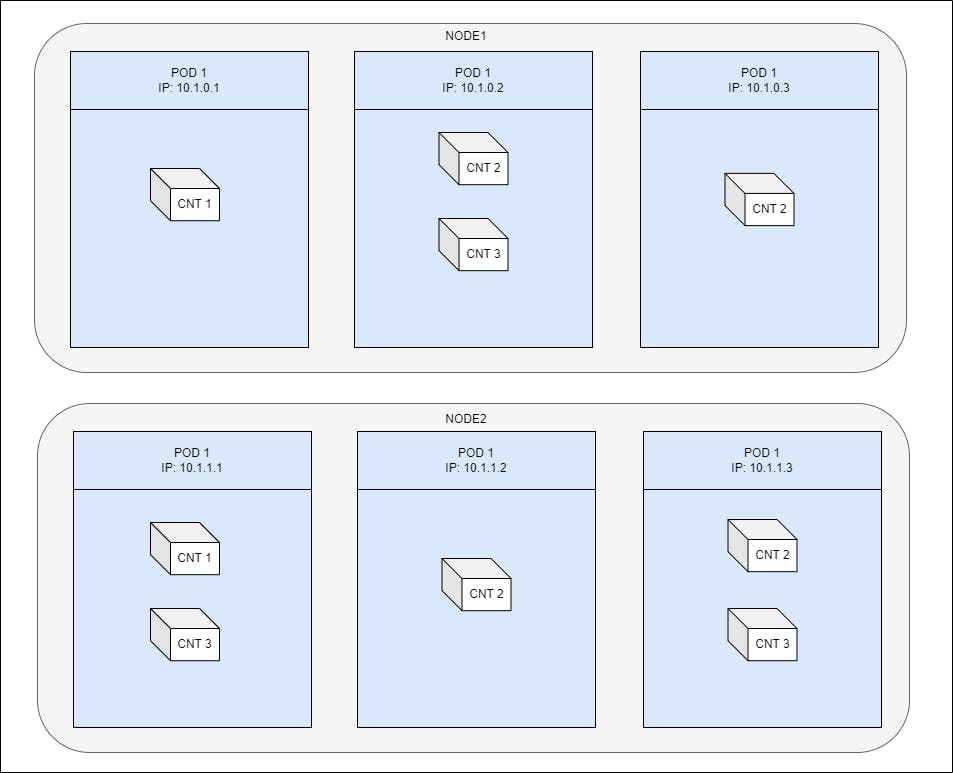

To better understand the relationship between Containers, Pods, and Nodes, consider the following figure:

As you can see, each pod has its own IP address and contains one or more containers, each running an application process. The pods are distributed over different Worker-Nodes.

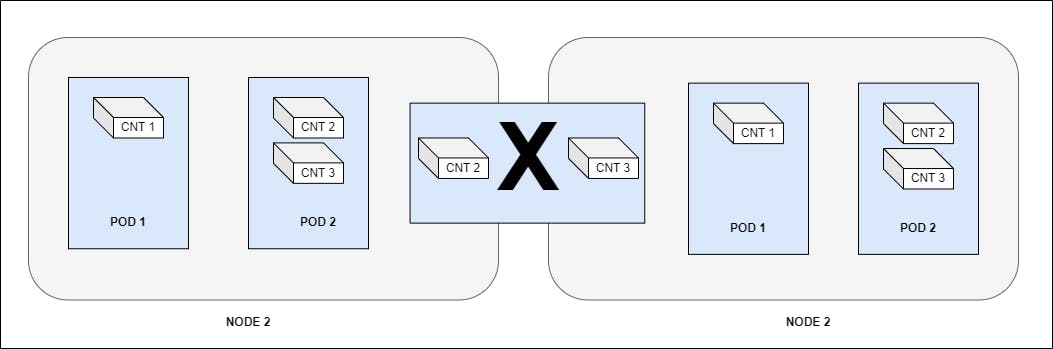

The key thing about pods is that when a pod contains multiple containers, all of them always run on a single worker node. A pod never spans multiple worker nodes, as shown in the following figure.

Understand why we need pods

But why do we even need pods?

Why can't we use containers directly?

Why would we even need to run multiple containers together?

Can't we put all our processes in one container?

We will answer these questions now:

Imagine an application consisting of multiple processes that communicate via IPC (Inter-Process Communication) or via locally stored files, which forces them to run on the same machine.

Since in Kubernetes you always run processes in containers and each container is very much like an isolated machine, you may think it makes sense to run multiple processes in a single container, but you shouldn't.

Containers are designed to run a single process per container (unless the process itself spawns child processes). If you run multiple unrelated processes in a single container, it is your responsibility to keep all these processes running, manage their logs, etc.

For example, you should include a mechanism for automatically restarting individual processes in the event of a crash.

Also, all of these processes would be logging to the same log, so you would have a hard time determining which process logged what.

Therefore, you should run each process in its own container. This is how Docker and Kubernetes are meant to be used.

Because you're not supposed to pack multiple processes into a single container, you need a higher-level construct that lets you tie containers together and manage them as a single unit. This is the reasoning behind pods.

A pod of containers allows you to run closely related processes together and provides them with (almost) the same environment as if they were all running in a single container, while keeping them somewhat isolated. This way you get the best of both worlds: you can take advantage of everything containers offer, while giving the processes the illusion of running together.

Inter-pod flat network overview

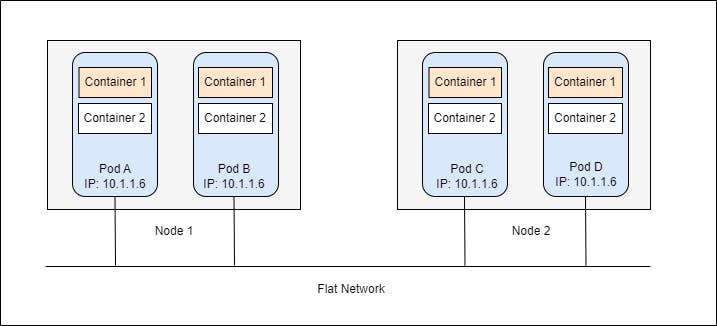

All pods in a Kubernetes cluster reside in a single, flat, shared network address space, which means that each pod can access all other pods at the other pod's IP address. No NAT (Network Address Translation) gateway exists between them. When two pods send network packets to each other, they each see the other's real IP address as the source IP address in the packet.

Therefore, communication between pods is always straightforward. It doesn't matter whether two pods are scheduled on the same worker node or on different ones; in either case, the containers inside these pods can communicate with each other over the flat, NAT-less network, just like computers on a local area network (LAN), regardless of the actual inter-node network topology. Like a computer on a LAN, each pod gets its own IP address and is reachable from all other pods through this network established specifically for pods. This is usually achieved through an additional software-defined network overlaid on the actual network.
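To make the idea of a single flat address space more concrete, here is a small sketch using Python's standard `ipaddress` module. The pod network range `10.244.0.0/16` and the one-subnet-per-node allocation are assumptions made for illustration only; real clusters and network plugins choose their own ranges and allocation schemes.

```python
import ipaddress

# Assumed cluster-wide pod network; the actual range depends on the cluster.
POD_NETWORK = ipaddress.ip_network("10.244.0.0/16")


def node_subnets(pod_network, new_prefix=24):
    """Split the pod network into per-node ranges (an assumed scheme)."""
    return pod_network.subnets(new_prefix=new_prefix)


def same_flat_network(*pod_ips):
    """True if every given pod IP lies in the one shared pod network."""
    return all(ip in POD_NETWORK for ip in pod_ips)


subnets = node_subnets(POD_NETWORK)
node_a_range = next(subnets)  # pods on worker node A
node_b_range = next(subnets)  # pods on worker node B

# Take the first usable address from each node's range as a sample pod IP.
pod_on_a = next(iter(node_a_range.hosts()))
pod_on_b = next(iter(node_b_range.hosts()))

if __name__ == "__main__":
    print(f"pod on node A: {pod_on_a}")
    print(f"pod on node B: {pod_on_b}")
    print(f"same flat network: {same_flat_network(pod_on_a, pod_on_b)}")
```

Even though the two sample pods sit on different nodes, both addresses belong to the same pod network, so each pod can address the other directly by its IP without any translation in between.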

Organizing containers across pods properly

- When deciding whether to place multiple containers in a single pod or in separate pods, always ask yourself the following questions:

  - Do they have to run together, or can they run on different hosts?

  - Do they represent a single whole, or are they independent components?

  - Should they be scaled together or individually?

- As a rule, run containers in separate pods unless there is a specific reason that requires them to be part of the same pod. The following figure will help you remember this.
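The three questions can be turned into a small decision helper. This is only one possible reading of the guideline, not an official rule: the function name and parameters are hypothetical, and it assumes that containers share a pod only when all three answers point toward keeping them together.

```python
def should_share_pod(must_run_on_same_host: bool,
                     form_single_whole: bool,
                     scale_together: bool) -> bool:
    """Return True only if every answer argues for a single pod.

    Separate pods are the default; any answer that points toward
    independence is treated as enough to split the containers.
    """
    return must_run_on_same_host and form_single_whole and scale_together


if __name__ == "__main__":
    # A frontend and a database: can run on different hosts, are
    # independent components, and scale separately -> separate pods.
    print(should_share_pod(False, False, False))

    # A main process and a helper that reads its local files:
    # must be co-located, form one unit, scale together -> same pod.
    print(should_share_pod(True, True, True))
```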